A peculiar phenomenon has emerged with OpenAI's ChatGPT: certain names cause the AI chatbot to halt mid-response and refuse to continue the conversation. This behavior stems from OpenAI's content filtering system, which is designed to prevent potentially problematic outputs.

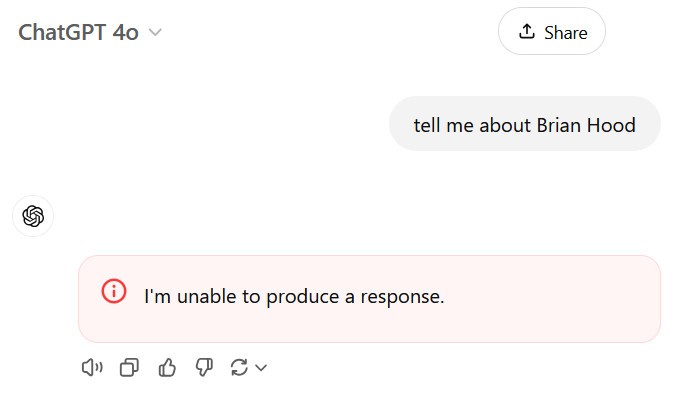

Several names have been identified that consistently trigger this shutdown response, including Brian Hood, Jonathan Turley, Jonathan Zittrain, David Faber, and Guido Scorza. When users mention these names in any context, ChatGPT responds with error messages like "I'm unable to produce a response" before ending the chat session.

The reason behind these built-in blocks appears to be tied to past incidents where ChatGPT generated false information about real people. For instance, Brian Hood, an Australian mayor, threatened legal action against OpenAI in 2023 after ChatGPT falsely claimed he had been imprisoned for bribery. In reality, Hood was a whistleblower who exposed corporate misconduct.

Similarly, Jonathan Turley, a law professor at George Washington University, found that ChatGPT had fabricated a sexual harassment scandal about him, complete with citations to fake news articles.

While these filters aim to prevent misinformation, they create new problems. Blocking a name outright affects everyone who shares it, which limits ChatGPT's usefulness whenever a task involves a person with one of these names. For example, a teacher with a student named David Faber would be unable to use ChatGPT for class-related tasks involving that student.
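To see why a blanket block catches unrelated people, consider a minimal sketch of a name-based output filter. This is purely illustrative: OpenAI has not described its implementation, so the blocklist structure, the `filter_output` function, and the matching strategy below are all assumptions. Only the names and the refusal wording come from the reports above.

```python
import re

# Hypothetical blocklist. The names are those reported in this article;
# how OpenAI actually stores or matches them is not publicly known.
BLOCKED_NAMES = [
    "Brian Hood",
    "Jonathan Turley",
    "Jonathan Zittrain",
    "David Faber",
    "Guido Scorza",
]

# One case-insensitive pattern that matches any blocked name as a substring.
_PATTERN = re.compile(
    "|".join(re.escape(name) for name in BLOCKED_NAMES),
    re.IGNORECASE,
)

REFUSAL = "I'm unable to produce a response."


def filter_output(text: str) -> str:
    """Return the text unchanged, or a refusal if any blocked name appears.

    The check is a plain string match: it has no way to tell which
    person with that name the text refers to.
    """
    if _PATTERN.search(text):
        return REFUSAL
    return text


if __name__ == "__main__":
    # A harmless classroom request is refused because the name matches.
    print(filter_output("Feedback for my student David Faber on his essay."))
    # The same request with a different name passes through.
    print(filter_output("Feedback for my student Dana Smith on her essay."))
```

Because a filter like this only compares strings, it cannot distinguish the person the block was meant to protect from anyone else with the same name, which is the over-blocking problem described above.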

Security experts have also identified potential exploits. Prompt engineer Riley Goodside demonstrated how these name-based filters could be used maliciously, embedding blocked names in barely visible text to intentionally crash ChatGPT sessions.

These early problems highlight the difficult balance between preventing harmful misinformation and maintaining practical functionality. OpenAI has yet to comment on these name-based restrictions or outline plans to address their limitations.
