In a groundbreaking move, the San Francisco City Attorney's office has filed a lawsuit against 16 popular websites that use artificial intelligence (AI) to digitally "undress" images of women and girls without their consent. The legal action aims to combat the growing problem of non-consensual deepfake pornography and to protect victims from exploitation.

The Lawsuit

City Attorney David Chiu announced the lawsuit, which targets websites that collectively received more than 200 million visits in the first half of 2024 alone. The complaint accuses these sites of violating state and federal laws prohibiting revenge pornography, deepfake pornography, and child pornography. It also alleges violations of California's unfair competition law.

The legal action seeks to:

- Shut down the offending websites

- Prevent their operators from creating future deepfake pornography

- Impose civil penalties

How the Technology Works

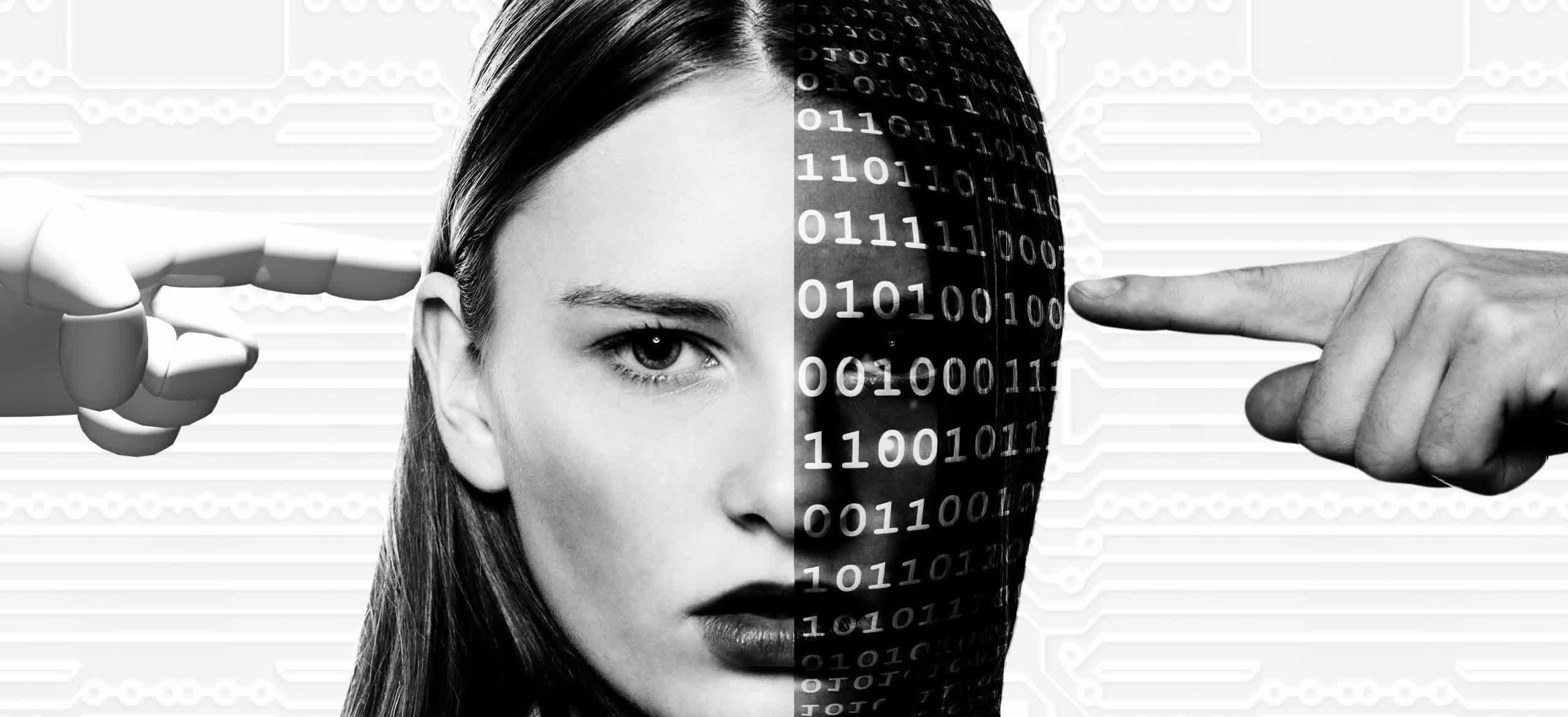

These AI-powered "nudification" websites allow users to upload photos of fully clothed individuals. The AI then alters the images to simulate nudity, creating realistic-looking pornographic content without the subject's knowledge or permission.

One website allegedly advertised its services by stating, "Imagine wasting time taking her out on dates, when you can just use [the website] to get her nudes."

Widespread Impact

Non-consensual AI-generated nude images have become increasingly prevalent as generative AI technology has advanced. Recent high-profile incidents, such as the circulation of fake explicit photos of Taylor Swift, have brought attention to the problem.

The impact extends beyond celebrities, affecting individuals from all walks of life:

- Schoolchildren across the country have faced expulsion or arrest for sharing AI-generated nude photos of classmates

- Victims report devastating effects on their mental health, reputation, and personal lives

Challenges in Combating the Problem

Identifying and removing these non-consensual images presents significant challenges:

- The AI-generated images carry no unique identifiers linking them to a specific website, so it is hard to determine which site produced a given image

- Once online, it's extremely difficult for victims to remove the images from the internet

Looking Ahead

This lawsuit represents a significant step in addressing the growing problem of AI-generated deepfake pornography. As the technology continues to advance, more legal and regulatory measures will likely be needed to protect individuals from this form of exploitation.

The case also highlights the need for broader discussions about the ethical use of AI and the potential consequences of its misuse in creating non-consensual sexual content.