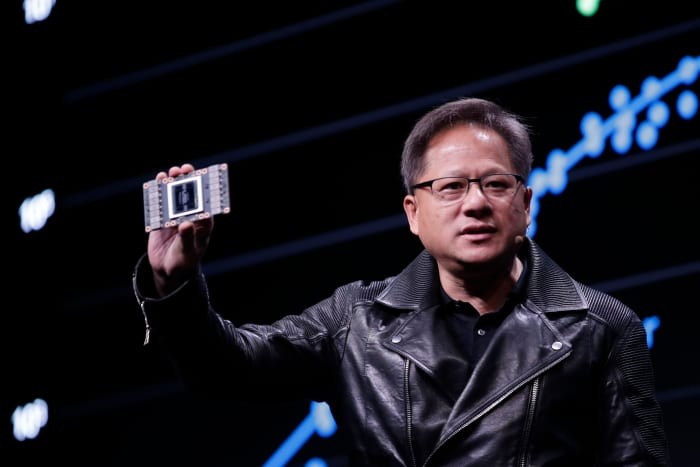

At CES in Las Vegas, Nvidia CEO Jensen Huang said that his company's AI chips are improving faster than Moore's Law, the long-standing benchmark for progress in computing.

"Our systems are progressing way faster than Moore's Law," Huang said in an interview, pointing to the performance of Nvidia's latest datacenter hardware as evidence.

Moore's Law, formulated by Intel co-founder Gordon Moore in 1965, predicted that the number of transistors on a chip would double every year, roughly doubling performance. Moore revised the pace to a doubling every two years in 1975. The principle guided the industry for decades, and Nvidia now claims its AI chips are outpacing it.

The GB200 NVL72, Nvidia's newest rack-scale datacenter system built on its GB200 superchips, illustrates this acceleration: Huang says it runs AI workloads 30 to 40 times faster than the previous generation. A jump of that size could reshape the economics of AI computing, particularly for the most compute-intensive applications.

Huang attributes this pace to Nvidia's work across the whole stack: "We can build the architecture, the chip, the system, the libraries, and the algorithms all at the same time." That integration, he argues, lets the company innovate on several technological layers at once rather than relying on chip improvements alone.

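Huang points to three drivers of AI scaling: pre-training (learning from massive datasets), post-training (refining a trained model, for example with human feedback), and test-time compute (giving a model more computation at inference time to work through a problem). Progress on all three can improve model capabilities, and Huang argues that faster hardware can lower the cost of each over time.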

Looking back, Huang says Nvidia's AI chips are 1,000 times more powerful than they were a decade ago. That far exceeds the roughly 32-fold gain that doubling every two years, the cadence most commonly cited for Moore's Law, would produce over the same ten years. The acceleration comes as major AI labs such as Google, OpenAI, and Anthropic rely heavily on Nvidia's technology to develop their models.
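Whether 1,000x per decade "outstrips" Moore's Law depends on which doubling period you compare against. The short Python sketch below makes the arithmetic explicit. It assumes performance compounds smoothly at a fixed rate, which real chip generations do not, and it takes Huang's 1,000x figure at face value. The function names are illustrative only.

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Total improvement after `years` if performance doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


def implied_annual_rate(total_factor: float, years: float) -> float:
    """Constant yearly multiplier that compounds to `total_factor` over `years`."""
    return total_factor ** (1 / years)


decade = 10
# Compare two doubling cadences over ten years (assumes smooth compounding).
print(f"Doubling every 2 years: {growth_factor(decade, 2):.0f}x")  # 32x
print(f"Doubling every year:    {growth_factor(decade, 1):.0f}x")  # 1024x
# Yearly multiplier implied by Huang's claimed 1,000x over a decade.
print(f"1,000x over a decade is about {implied_annual_rate(1000, decade):.3f}x per year")  # 1.995x
```

Under these assumptions, a two-year doubling cadence yields 32x per decade, while 1,000x works out to almost exactly one doubling per year, which is close to the pace of Moore's original 1965 prediction.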

The gains matter beyond benchmark numbers. As AI models grow more sophisticated and compute-intensive, faster chips could lower the cost of running them and make advanced AI applications affordable for widespread use.

While some industry observers question whether AI progress has plateaued, Huang remains bullish on continued advancement, suggesting that improved chip performance will drive down computing costs just as Moore's Law did in previous decades.