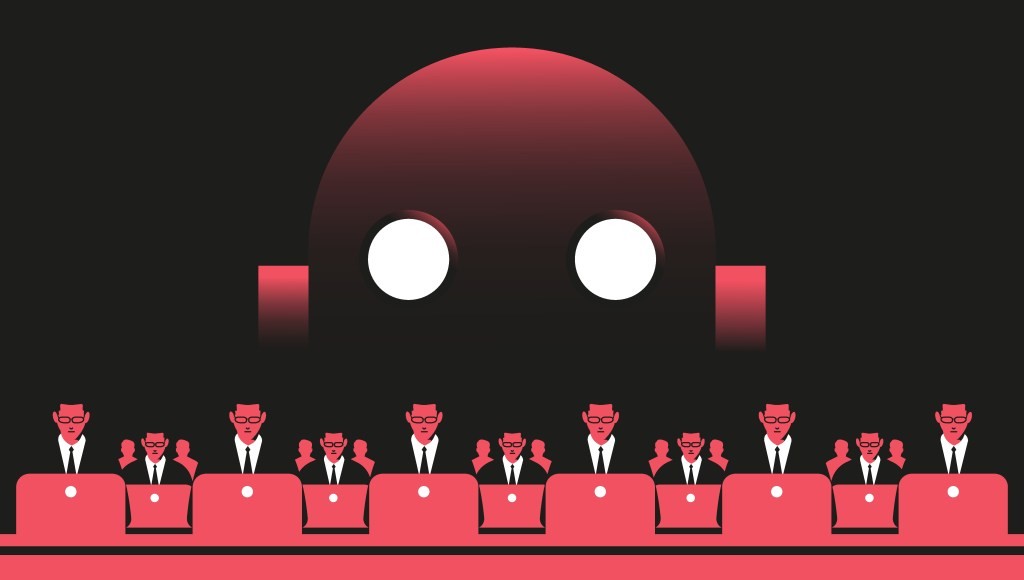

After a year of dire warnings about artificial intelligence in 2023, Silicon Valley successfully pushed back against the "AI doom" narrative in 2024, redirecting public attention toward AI's business potential while sidelining safety concerns.

The tech industry's counteroffensive was led by prominent venture capitalist Marc Andreessen, who published an influential essay titled "Why AI Will Save the World." The piece argued forcefully for rapid, unrestricted AI development and minimal regulation, a stance that aligned with Silicon Valley's commercial interests.

This shift in narrative coincided with reduced emphasis on AI safety at major tech companies. OpenAI saw an exodus of safety researchers amid concerns about a weakening safety culture. Meanwhile, AI investment reached record levels in 2024, with companies racing to develop and deploy new capabilities. Microsoft, for example, purchased 485,000 Nvidia Hopper chips in 2024, more than twice as many as its closest competitors in the tech industry.

The battle came to a head in California with Senate Bill 1047, which aimed to prevent potential catastrophic harm from advanced AI systems. Despite support from renowned AI researchers Geoffrey Hinton and Yoshua Bengio, Governor Gavin Newsom vetoed the bill after intense opposition from tech industry leaders.

Critics alleged that venture capital firms engaged in misleading campaigns against SB 1047, including claims that it would criminalize software development. The bill's defeat marked a turning point in how policymakers approach AI risks.

The incoming Trump administration has announced plans to repeal Biden's earlier AI executive order and has named venture capitalist Sriram Krishnan as a senior AI adviser. Republican priorities now focus on AI development, military applications, and competition with China rather than catastrophic risks.

Industry leaders like Meta's Yann LeCun have become more vocal in dismissing AI doom scenarios as "preposterous." However, real-world incidents continue to raise safety questions, such as ongoing investigations into AI chatbots' potential role in cases of self-harm.

Looking ahead to 2025, advocates for AI safety regulation face an increasingly challenging landscape. While some legislators like Senator Mitt Romney continue pushing for oversight of long-term AI risks, the tech industry's optimistic vision of AI development appears to have gained the upper hand.