Roblox Tightens Child Safety with Major Social Feature Restrictions

Popular gaming platform Roblox announces sweeping restrictions on social features for users under 13, including limits on chat and on access to unrated content. The changes, effective November 2023, come amid heightened scrutiny of child safety on the platform.

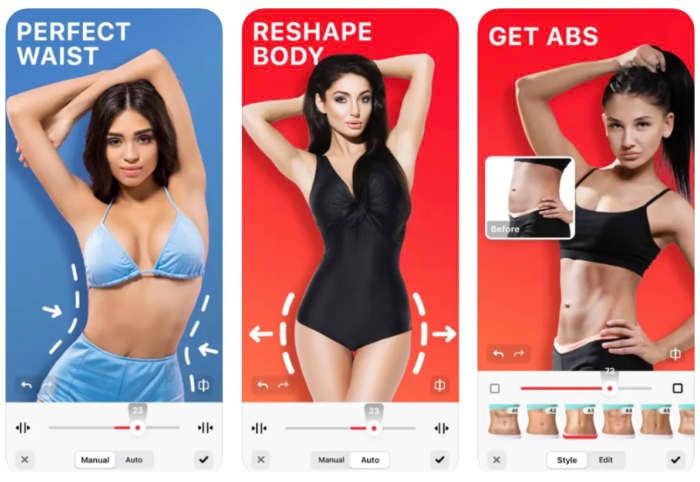

Child Safety Concerns Mount as Apple's App Store Found Hosting Age-Inappropriate Content

An investigation reveals hundreds of apps on Apple's App Store inappropriately rated as child-safe, including AI dating simulators and drug-dealing games. The findings point to serious gaps in content moderation, and the investigators call for independent oversight of age ratings.

Apple Faces $1.2B Lawsuit Over Abandoned Child Safety Scanning Feature

A child abuse survivor is suing Apple for abandoning its planned iCloud detection system for child sexual abuse material (CSAM), seeking damages exceeding $1.2 billion. The lawsuit, brought on behalf of thousands of victims, challenges how Apple balances user privacy against child protection.

Apple Empowers Kids to Report Nudity in iMessage with iOS 18.2 Update

Apple's iOS 18.2 introduces a child safety feature in Australia that lets kids report nude content they receive in iMessage directly to Apple. The update builds on existing safety measures and aligns with new Australian regulations aimed at combating child sexual abuse material online.